Following last week’s trio of AI releases, Alibaba has unveiled another open-source model: Tongyi Wanxiang Wan2.2, a cinematic video generation model. Wan2.2 builds three core elements of cinematic aesthetics (lighting, color, and camera language) into the model and exposes more than 60 intuitive, controllable parameters, making it significantly more efficient to produce movie-quality visuals.

Currently, the model generates a 5-second high-definition video in a single run, and users can assemble short films through multiple rounds of prompting. Tongyi Wanxiang plans to extend the duration of a single generation in the future, making AI video creation even more efficient.

Wan2.2 comprises three open-source models: Text-to-Video (Wan2.2-T2V-A14B), Image-to-Video (Wan2.2-I2V-A14B), and Unified Video Generation (Wan2.2-TI2V-5B). The Text-to-Video and Image-to-Video models are the industry’s first video generation models to use a Mixture of Experts (MoE) architecture, each with 27 billion total parameters and 14 billion active parameters. Each consists of a high-noise expert and a low-noise expert, which handle the overall video layout and the fine details, respectively. Because only about half of the parameters are active at any one time, this approach reduces computational resource consumption by approximately 50% compared to models of similar scale, easing the heavy compute cost that comes from the very large number of tokens in video generation. It also achieves significant improvements in complex motion generation, character interactions, aesthetic expression, and dynamic scenes.
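
To make the high-noise/low-noise split concrete, here is a minimal sketch in Python of how a two-expert denoiser could hand off between experts during sampling. It is an illustration only, not Wan2.2's actual code: the class `TwoExpertDenoiser`, the `boundary` value of 0.5, the linear noise schedule, and the toy experts are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Latent = List[float]
# An "expert" here is any function that maps (latent, noise_level) to a new latent.
Expert = Callable[[Latent, float], Latent]


@dataclass
class TwoExpertDenoiser:
    """Toy two-expert denoiser: only one expert runs per step."""
    high_noise_expert: Expert
    low_noise_expert: Expert
    boundary: float = 0.5  # hypothetical switch point, not taken from Wan2.2

    def pick(self, noise_level: float) -> str:
        # Early, noisy steps go to the layout expert; later steps to the detail expert.
        return "high" if noise_level >= self.boundary else "low"

    def step(self, latent: Latent, noise_level: float) -> Latent:
        if self.pick(noise_level) == "high":
            return self.high_noise_expert(latent, noise_level)
        return self.low_noise_expert(latent, noise_level)


def run(denoiser: TwoExpertDenoiser, latent: Latent, steps: int) -> Tuple[Latent, List[str]]:
    """Run a linear schedule from noise level 1.0 downward and record which expert ran."""
    trace: List[str] = []
    for i in range(steps):
        noise_level = 1.0 - i / steps  # assumed linear schedule, for illustration
        trace.append(denoiser.pick(noise_level))
        latent = denoiser.step(latent, noise_level)
    return latent, trace


# Toy stand-ins for the two networks (placeholders, not real models).
def coarse_layout(latent: Latent, noise_level: float) -> Latent:
    return [v * 0.5 for v in latent]


def fine_detail(latent: Latent, noise_level: float) -> Latent:
    return [v + 1.0 for v in latent]


denoiser = TwoExpertDenoiser(coarse_layout, fine_detail)
final_latent, expert_trace = run(denoiser, [8.0, 16.0], steps=4)
print(expert_trace)
print(final_latent)
```

Each step calls exactly one expert, which is why the active parameter count stays at roughly half of the total even though both experts are part of the model.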

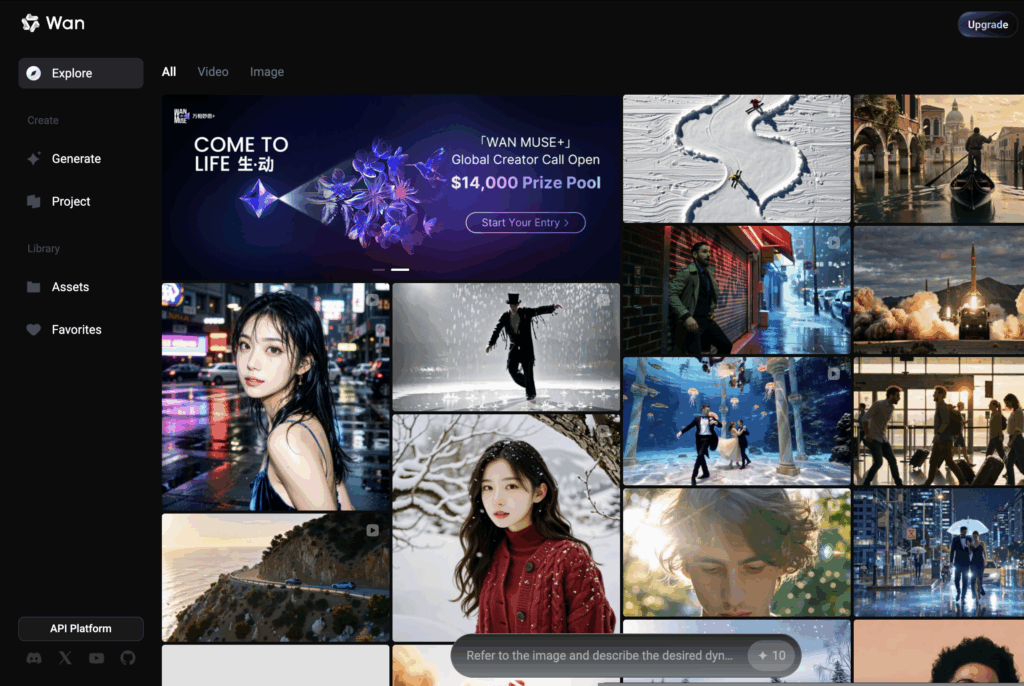

Moreover, Wan2.2 pioneers a cinematic aesthetic control system, delivering professional-grade capabilities in lighting, color, composition, and micro-expressions. For instance, given keywords such as “twilight,” “soft light,” “rim light,” “warm tones,” and “centered composition,” the model can automatically generate romantic scenes with golden sunset hues. Alternatively, combining “cool tones,” “hard light,” “balanced composition,” and “low angle” produces visuals akin to sci-fi films.
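
The keyword combinations above can be kept as reusable presets and appended to a scene description. The sketch below is a hypothetical helper, not part of any Wan2.2 tooling: `build_prompt`, the preset names, and the comma-separated prompt format are assumptions, and the subject sentence is invented for the example.

```python
from typing import Iterable, List

# Keyword sets taken from the examples in the text above.
ROMANTIC_SUNSET: List[str] = [
    "twilight", "soft light", "rim light", "warm tones", "centered composition",
]
SCI_FI: List[str] = [
    "cool tones", "hard light", "balanced composition", "low angle",
]


def build_prompt(subject: str, aesthetics: Iterable[str]) -> str:
    """Join a scene description with aesthetic keywords, dropping blanks and duplicates.

    Hypothetical helper: the comma-separated format is an assumption,
    not a documented Wan2.2 prompt syntax.
    """
    parts = [subject.strip()]
    seen = set()
    for keyword in aesthetics:
        key = keyword.strip().lower()
        if key and key not in seen:
            seen.add(key)
            parts.append(key)
    return ", ".join(parts)


romantic_prompt = build_prompt("a couple walking along a beach", ROMANTIC_SUNSET)
sci_fi_prompt = build_prompt("a lone pilot entering a hangar", SCI_FI)
print(romantic_prompt)
print(sci_fi_prompt)
```

Keeping the presets separate from the subject makes it easy to render the same scene in both looks and compare the results.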